Testing without mocking frameworks.

Over the years, my coding practices have changed a lot, from hacking away until it works to TDD/BDD/DDD and everything in between. One of the biggest changes in my developer career has been when, why, and how I test my code. In particular, my view on mocking frameworks has changed a lot. A couple of years ago I was convinced I could not live without them; now I wish I could do just that.

I've come to the point where I generally advise against the use of mocking frameworks. After a couple of interactions on Twitter, I thought it'd be good to share why I moved away from using mocking frameworks and what I use as an alternative.

Maintaining code that uses mocking frameworks.

Using mocking frameworks to isolate code is easy. Going down this path at the start of a project is very appealing. During this stage, code is added to the project in order to create more functionality. We create features, and hashing them out quickly yields good results for the business. Our time to market is short and the overhead of introducing new capabilities is low. During this time, features tend not to be very complicated compared to those added later in the life of the application.

We're adding code, not changing it, which means we're missing part of the feedback cycle. When we create code, the context is top of mind and we don't need the code to convey a lot of intent for us to be able to do our jobs. When we change code, our work is very different from when we're creating it. We use different tools, different techniques, and we have different needs. Refactoring techniques and ease of understanding become more important. Being able to do automated refactorings, such as renames and moves, becomes vital.

Let's take a look at an example of test-code that uses a mocking framework:

/**
 * @test
 */
public function testing_something_with_mocks(): void
{
    $mock = Mockery::mock(ExternalDependency::class);
    $mock->shouldReceive('oldMethodName')
        ->once()
        ->with('some_argument')
        ->andReturn('some_response');

    $dependingCode = new DependingCode($mock);
    $result = $dependingCode->performOperation();

    $this->assertEquals('some_response', $result);
}

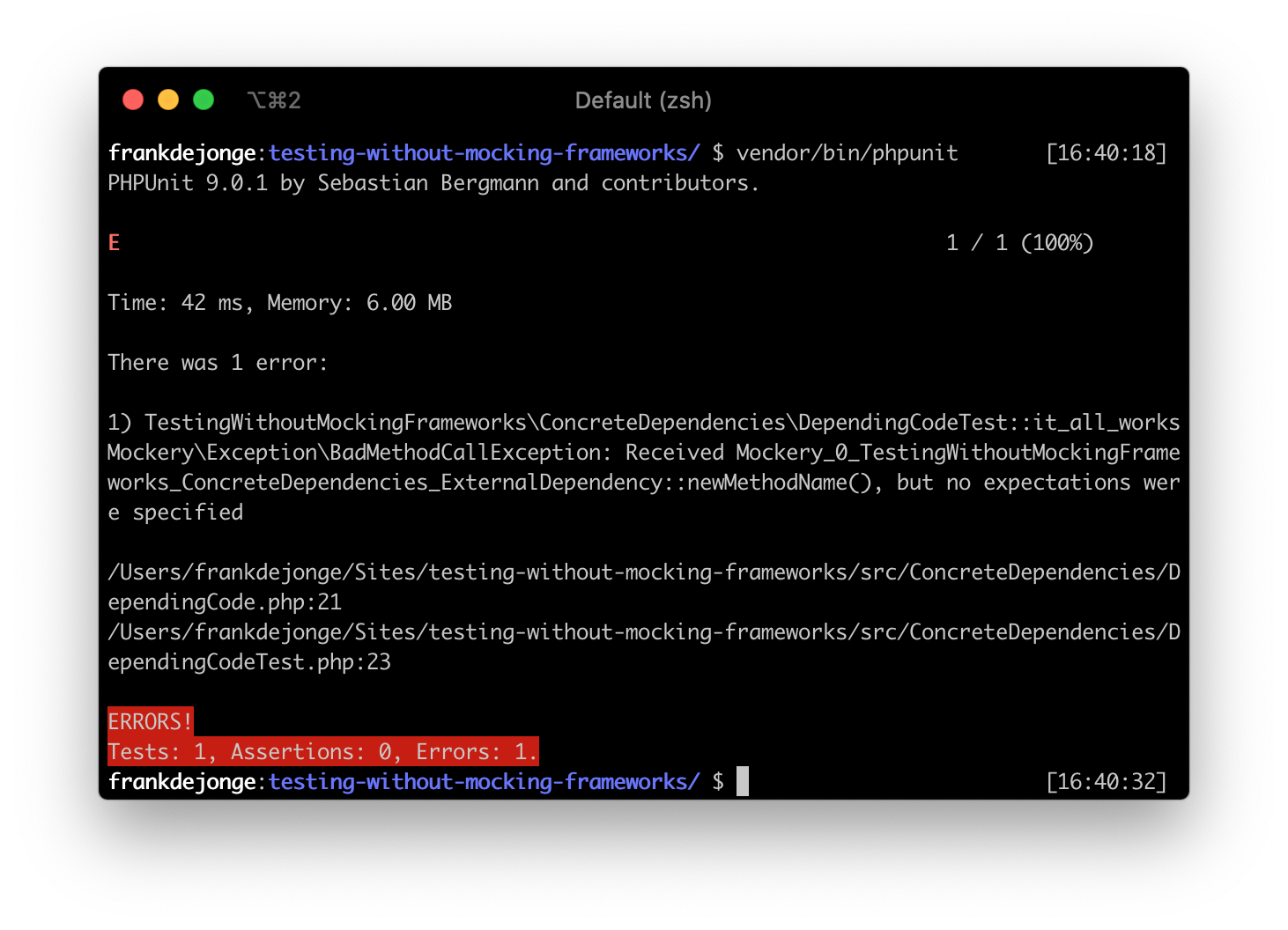

In the test-code above, a mock is being created for an ExternalDependency class. Some expectations are being set and a method response is being fixated. This is not very complicated, but what happens when the code that is tested needs to change? For example, what happens when we try to refactor this code and rename oldMethodName to newMethodName? After a standard IDE rename-refactor, our tests are broken:

IDEs, such as PhpStorm, have ways to work around this by searching for text occurrences in our code. This, however, can become unpredictable and error-prone. When more generic (or more common) terms are used for method names, these types of dynamic lookups are not as reliable. Renaming them results in long lists of occurrences that require manual checking. I've personally encountered situations where performing these manual checks was more costly than doing the method rename by hand.

While the cost of introduction (writing mocks) is low, the cost of change is a lot higher. Depending on the extent to which you use mocks, the cost of ownership can also be high. Mocking frameworks tend to be very complex and dynamic. Using them has a performance penalty. If you've ever used mocking frameworks to model more complex interactions, you'll know that these setup methods tend to grow very quickly.

So what can we do to improve our situation? First, let's turn the example into something a little more concrete.

Invoicing clients based on calculated costs.

Take a look at the following code. In this example, we're modeling an invoice service. It has one method to invoice a client, referenced by its ID, for a given invoice period.

use League\Flysystem\Filesystem;
use League\Flysystem\UnableToWriteFile;

class InvoiceService
{
    private Filesystem $storage;

    public function __construct(Filesystem $storage)
    {
        $this->storage = $storage;
    }

    public function invoiceClient(string $clientId, InvoicePeriod $period): void
    {
        $cost = $this->calculateCosts($clientId, $period);
        $invoice = new Invoice($clientId, $cost, $period);

        try {
            $path = '/invoices/' . $clientId . '/' . $period->toString() . '.txt';
            $this->storage->write($path, json_encode($invoice));
        } catch (UnableToWriteFile $exception) {
            throw new UnableToInvoiceClient("Unable to upload the invoice.", 0, $exception);
        }
    }

    private function calculateCosts(string $clientId, InvoicePeriod $period): int
    {
        return 42;
    }
}
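The service leans on two value objects, Invoice and InvoicePeriod, that this post does not show. A minimal sketch of what they might look like follows, assuming the JSON shape and the "2020/3" path segment used in the tests in this post. The property names and the InvoicePeriod::fromJsonPayload helper are assumptions made for illustration.

```php
<?php

// Hypothetical value objects; only the method names used in the post are taken from it.
final class InvoicePeriod implements JsonSerializable
{
    private int $year;
    private int $month;

    private function __construct(int $year, int $month)
    {
        $this->year = $year;
        $this->month = $month;
    }

    public static function fromDateTime(DateTimeInterface $dateTime): self
    {
        // 'n' is the month number without a leading zero, e.g. 3 for March.
        return new self((int) $dateTime->format('Y'), (int) $dateTime->format('n'));
    }

    // Assumed helper for rebuilding a period from decoded JSON.
    public static function fromJsonPayload(array $payload): self
    {
        return new self((int) $payload['year'], (int) $payload['month']);
    }

    public function toString(): string
    {
        return $this->year . '/' . $this->month;
    }

    public function jsonSerialize(): array
    {
        return ['year' => $this->year, 'month' => $this->month];
    }
}

final class Invoice implements JsonSerializable
{
    private string $clientId;
    private int $amount;
    private InvoicePeriod $invoicePeriod;

    public function __construct(string $clientId, int $amount, InvoicePeriod $invoicePeriod)
    {
        $this->clientId = $clientId;
        $this->amount = $amount;
        $this->invoicePeriod = $invoicePeriod;
    }

    public static function fromJsonPayload(array $payload): self
    {
        return new self(
            $payload['client_id'],
            $payload['amount'],
            InvoicePeriod::fromJsonPayload($payload['invoice_period'])
        );
    }

    public function clientId(): string
    {
        return $this->clientId;
    }

    public function invoicePeriod(): InvoicePeriod
    {
        return $this->invoicePeriod;
    }

    public function jsonSerialize(): array
    {
        // The nested InvoicePeriod is serialized through its own jsonSerialize().
        return [
            'client_id' => $this->clientId,
            'amount' => $this->amount,
            'invoice_period' => $this->invoicePeriod,
        ];
    }
}

$period = InvoicePeriod::fromDateTime(DateTimeImmutable::createFromFormat('!Y-m', '2020-03'));
$invoice = new Invoice('abc', 42, $period);
$json = json_encode($invoice);
$restored = Invoice::fromJsonPayload(json_decode($json, true));
```

Keeping the serialization inside the value objects means the service only has to call json_encode, but it also means every test that asserts on the raw JSON string is coupled to these field names.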

So, how can we test this code? Let's first test this with mocks.

use League\Flysystem\Filesystem;
use PHPUnit\Framework\TestCase;

class TestInvoiceServiceWithMocksTest extends TestCase
{
    /**
     * @test
     */
    public function invoicing_a_client(): void
    {
        // Need to work around marking this test as risky
        $this->expectNotToPerformAssertions();

        $mock = Mockery::mock(Filesystem::class);
        $mock->shouldReceive('write')
            ->once()
            ->with(
                '/invoices/abc/2020/3.txt',
                '{"client_id":"abc","amount":42,"invoice_period":{"year":2020,"month":3}}'
            );

        $invoicePeriod = InvoicePeriod::fromDateTime(DateTimeImmutable::createFromFormat('!Y-m', '2020-03'));
        $invoiceService = new InvoiceService($mock);

        $invoiceService->invoiceClient('abc', $invoicePeriod);
    }

    protected function tearDown(): void
    {
        Mockery::close();
    }
}

When I look at a setup like this, a couple of things draw my attention. Apart from having to trick PHPUnit into marking this test as "not risky", the things that I want to focus on are:

- Amount of mocking code vs other code.

- High coupling between test and implementation.

- The test does not describe desired behavior, it validates implementation.

1. Amount of mocking code vs other code.

In this test case, although pretty simple, the setup code for mocks already outweighs the rest of the test code. In more complex testing setups, the amount of mocking code explodes. This hurts our ability to reason about them since bigger tests are generally more difficult to understand.

2. High coupling between test and implementation.

The test setup is tightly coupled to the implementation details of the class we're testing. The test needs to know how the invoice is serialized into JSON to make the right assertions. Whenever the internal implementation changes, the tests need to change as well. If we want to rename a field in the stored JSON, we'd have to mirror that change in the tests. This makes a test fragile. Stable tests, on the other hand, allow you to refactor the internals of the implementation without the need to "fix the test".

3. The test does not describe desired behavior, it validates implementation.

When I started out writing tests, I was really focused on testing code. Only later did I realize that my tests were functional descriptions of how I wanted my code to behave. This totally changed the way I perceived writing tests. It was this perspective that steered me in a direction that made my code more stable, made my tests less fragile, and increased the confidence the tests gave me and my co-workers. The intent of the example test above is not as clear as it could be.

You could argue that the communication between objects is, or should be, part of the specification. I'd argue there are other ways to test this which provide more assurance than mocks do. I'll elaborate on this later in the post.

Improving our test-case.

Let's look into a couple of concrete ways we can improve our test-case. First up, removing the mock. This code uses Flysystem, so we can just use an in-memory filesystem implementation for our tests.

/**
 * @test
 */
public function invoicing_the_client(): void
{
    // Arrange
    $storage = new Filesystem(new InMemoryFilesystemAdapter());
    $invoicePeriod = InvoicePeriod::fromDateTime(DateTimeImmutable::createFromFormat('!Y-m', '2020-03'));
    $invoiceService = new InvoiceService($storage);

    // Act
    $invoiceService->invoiceClient('abc', $invoicePeriod);

    // Assert
    $expectedContents = '{"client_id":"abc","amount":42,"invoice_period":{"year":2020,"month":3}}';
    $this->assertTrue($storage->fileExists('/invoices/abc/2020/3.txt'));
    $this->assertEquals($expectedContents, $storage->read('/invoices/abc/2020/3.txt'));
}

Now, this code interacts with a real implementation. In the Flysystem test suite, the in-memory implementation is tested against the same set of tests as any other implementation provided. This allows us to safely interchange these implementations.

The new setup also follows the AAA-pattern: Arrange, Act, Assert. This pattern, when consistently used, makes a test suite very predictable. Setups that use mocking frameworks tend to mix the arrange and assert sections, which makes the test less clear.

Even though we've been able to remove the use of a mocking framework and make our tests more readable, we can still improve. Our test is still aware of the underlying implementation details. The fact that we need to write JSON in this test is a great indicator.

Defining meaningful boundaries.

At the start of a project, code is mostly added rather than changed. During this stage, certain feedback loops are missing. Only when projects mature are we faced with the consequences of the actions taken by our past selves. Code that is difficult to maintain has a high cost of change, yet it often had a low cost of introduction. This is OK, because it is very hard to define all the correct boundaries at the start of a project.

Certain boundaries only become clear after getting deeper insights into the domain model and/or problem space. Naive assumptions are made, resulting in tighter coupling. When you encounter tests that are difficult to maintain, it may be the right time to create better, more meaningful, boundaries.

In our invoicing example, my imaginary domain experts have told me that invoices need to be submitted to an invoice portal. The portal is able to let you know whether or not a particular invoice was submitted. Let's turn that into an interface:

interface InvoicePortal
{
    /**
     * @throws UnableToInvoiceClient
     */
    public function submitInvoice(Invoice $invoice): void;

    public function wasInvoiceSubmitted(Invoice $invoice): bool;
}

This interface represents the needs of the domain. We can now create a test-case that ensures that any implementations work as expected.

use DateTimeImmutable;
use PHPUnit\Framework\TestCase;

abstract class InvoicePortalTestCase extends TestCase
{
    abstract protected function createInvoicePortal(): InvoicePortal;

    /**
     * @test
     */
    public function submitting_an_invoice_successfully(): void
    {
        // Arrange
        $invoicePortal = $this->createInvoicePortal();
        $invoicePeriod = InvoicePeriod::fromDateTime(DateTimeImmutable::createFromFormat('!Y-m', '2020-03'));
        $invoice = new Invoice('abc', 42, $invoicePeriod);

        // Act
        $invoicePortal->submitInvoice($invoice);

        // Assert
        $this->assertTrue($invoicePortal->wasInvoiceSubmitted($invoice));
    }

    /**
     * @test
     */
    public function detecting_if_an_invoice_was_NOT_submitted(): void
    {
        // Arrange
        $invoicePortal = $this->createInvoicePortal();
        $invoicePeriod = InvoicePeriod::fromDateTime(DateTimeImmutable::createFromFormat('!Y-m', '2020-03'));
        $invoice = new Invoice('abc', 42, $invoicePeriod);

        // Act
        $wasInvoiceSubmitted = $invoicePortal->wasInvoiceSubmitted($invoice);

        // Assert
        $this->assertFalse($wasInvoiceSubmitted);
    }

    /**
     * @test
     */
    public function failing_to_submit_an_invoice(): void
    {
        // ...
    }
}

This abstract test-case allows us to re-use the test setup for any number of implementations of the invoice portal interface. You could say this test case is a shared contract for the interface implementations.

Here is a Flysystem-based implementation:

use League\Flysystem\Filesystem;
use League\Flysystem\InMemory\InMemoryFilesystemAdapter;

class FlysystemInvoicePortalTest extends InvoicePortalTestCase
{
    protected function createInvoicePortal(): InvoicePortal
    {
        return new FlysystemInvoicePortal(new Filesystem(new InMemoryFilesystemAdapter()));
    }
}

// --------- NEW FILE --------- //

use League\Flysystem\FilesystemOperationFailed;
use League\Flysystem\FilesystemOperator;
use League\Flysystem\UnableToReadFile;

class FlysystemInvoicePortal implements InvoicePortal
{
    private FilesystemOperator $filesystem;

    public function __construct(FilesystemOperator $filesystemWriter)
    {
        $this->filesystem = $filesystemWriter;
    }

    public function submitInvoice(Invoice $invoice): void
    {
        $json = json_encode($invoice);
        $path = sprintf('/invoices/%s/%s.txt', $invoice->clientId(), $invoice->invoicePeriod()->toString());

        try {
            $this->filesystem->write($path, $json);
        } catch (FilesystemOperationFailed $exception) {
            throw new UnableToInvoiceClient("Unable to upload invoice to portal", 0, $exception);
        }
    }

    public function wasInvoiceSubmitted(Invoice $invoice): bool
    {
        $path = sprintf('/invoices/%s/%s.txt', $invoice->clientId(), $invoice->invoicePeriod()->toString());

        try {
            $contents = $this->filesystem->read($path);
        } catch (UnableToReadFile $exception) {
            return false;
        }

        $storedInvoice = Invoice::fromJsonPayload(json_decode($contents, true));

        // Compare by value for VO equality.
        return $invoice == $storedInvoice;
    }
}
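The == on the last line of wasInvoiceSubmitted matters. For objects, PHP's loose comparison checks that both are instances of the same class with equal property values, while === only holds for the very same instance. A small standalone sketch, using a hypothetical Period class rather than the Invoice from this post:

```php
<?php

// Hypothetical value object, used only to illustrate comparison semantics.
final class Period
{
    private int $year;
    private int $month;

    public function __construct(int $year, int $month)
    {
        $this->year = $year;
        $this->month = $month;
    }
}

$first = new Period(2020, 3);
$sameValue = new Period(2020, 3);
$otherValue = new Period(2020, 4);

// Loose comparison: same class and equal property values.
$looselyEqual = $first == $sameValue;

// Strict comparison: true only for the very same instance.
$strictlyEqual = $first === $sameValue;

// Different property values are not equal under either operator.
$differentValue = $first == $otherValue;

// in_array follows the same rules; its third argument switches on strict comparison.
$foundLoosely = in_array($sameValue, [$first], false);
$foundStrictly = in_array($sameValue, [$first], true);

var_dump($looselyEqual, $strictlyEqual, $differentValue, $foundLoosely, $foundStrictly);
// bool(true) bool(false) bool(false) bool(true) bool(false)
```

Loose comparison also applies to nested objects, which is why it suits small immutable value objects. A dedicated equals() method is a common alternative when the comparison rules need to be more explicit.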

Creating your own fakes.

Now that we've got an interface and an abstract test-case, we can create a fake implementation. A fake is a type of test double that is created specifically for testing.

The implementation above is probably simple enough to be used in other test-cases. However, if it were a little more complex, or had any network interaction, a dedicated fake would be the better choice. Let's look at how we can create a fake implementation:

class FakeInvoicePortalTest extends InvoicePortalTestCase
{
    protected function createInvoicePortal(): InvoicePortal
    {
        return new FakeInvoicePortal();
    }
}

// --------- NEW FILE --------- //

class FakeInvoicePortal implements InvoicePortal
{
    private array $submittedInvoices = [];

    /**
     * @inheritDoc
     */
    public function submitInvoice(Invoice $invoice): void
    {
        $this->submittedInvoices[] = $invoice;
    }

    public function wasInvoiceSubmitted(Invoice $invoice): bool
    {
        // Non-strict lookup, so invoices are matched by value rather than by identity.
        return in_array($invoice, $this->submittedInvoices, false);
    }
}

This fake implementation is tested using the same abstract test-case. This means we can trust it'll behave according to the same rules defined for the actual implementation.

With this fake in place, we can now, once again, test our invoice service, which gives us the final result:

use PHPUnit\Framework\TestCase;

class InvoiceServiceTest extends TestCase
{
    /**
     * @test
     */
    public function invoicing_a_client_successfully(): void
    {
        // Arrange
        $invoicePortal = new FakeInvoicePortal();
        $invoiceService = new InvoiceService($invoicePortal);
        $invoicePeriod = InvoicePeriod::fromDateTime(DateTimeImmutable::createFromFormat('!Y-m', '2020-03'));

        // Act
        $invoiceService->invoiceClient('abc', $invoicePeriod);

        // Assert
        $expectedInvoice = new Invoice('abc', 42, $invoicePeriod);
        $this->assertTrue($invoicePortal->wasInvoiceSubmitted($expectedInvoice));
    }
}
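The test above constructs the InvoiceService with an InvoicePortal, but the refactored service itself is not shown. One way it might look is sketched below, with stripped-down stand-ins for Invoice, InvoicePeriod, InvoicePortal, and the fake so that the snippet runs on its own. The constructor of the InvoicePeriod stand-in and the property names are assumptions.

```php
<?php

// Stand-ins for the post's classes, reduced to what this sketch needs.
final class InvoicePeriod
{
    private int $year;
    private int $month;

    public function __construct(int $year, int $month)
    {
        $this->year = $year;
        $this->month = $month;
    }
}

final class Invoice
{
    private string $clientId;
    private int $amount;
    private InvoicePeriod $invoicePeriod;

    public function __construct(string $clientId, int $amount, InvoicePeriod $invoicePeriod)
    {
        $this->clientId = $clientId;
        $this->amount = $amount;
        $this->invoicePeriod = $invoicePeriod;
    }
}

interface InvoicePortal
{
    public function submitInvoice(Invoice $invoice): void;

    public function wasInvoiceSubmitted(Invoice $invoice): bool;
}

final class FakeInvoicePortal implements InvoicePortal
{
    private array $submittedInvoices = [];

    public function submitInvoice(Invoice $invoice): void
    {
        $this->submittedInvoices[] = $invoice;
    }

    public function wasInvoiceSubmitted(Invoice $invoice): bool
    {
        return in_array($invoice, $this->submittedInvoices, false);
    }
}

// Assumed shape of the refactored service: it only talks to the InvoicePortal.
final class InvoiceService
{
    private InvoicePortal $invoicePortal;

    public function __construct(InvoicePortal $invoicePortal)
    {
        $this->invoicePortal = $invoicePortal;
    }

    public function invoiceClient(string $clientId, InvoicePeriod $period): void
    {
        $cost = $this->calculateCosts($clientId, $period);

        // Storage details and error translation now live behind the portal,
        // so an exception thrown there simply bubbles up to the caller.
        $this->invoicePortal->submitInvoice(new Invoice($clientId, $cost, $period));
    }

    private function calculateCosts(string $clientId, InvoicePeriod $period): int
    {
        return 42; // Placeholder, as in the original example.
    }
}

$portal = new FakeInvoicePortal();
$service = new InvoiceService($portal);
$period = new InvoicePeriod(2020, 3);

$service->invoiceClient('abc', $period);

$submitted = $portal->wasInvoiceSubmitted(new Invoice('abc', 42, $period));
$notSubmitted = $portal->wasInvoiceSubmitted(new Invoice('xyz', 42, $period));
```

In this shape the service no longer builds paths, encodes JSON, or catches filesystem exceptions; those concerns sit in whichever InvoicePortal implementation is injected.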

What did we achieve?

The tests now follow the AAA-pattern and no longer depend on a mocking framework. Apart from this, there are several benefits to using this approach:

- The tests no longer contain implementation details.

Now that the InvoiceService depends on an InvoicePortal, we no longer see any Flysystem-related code in the tests. This improves the stability of the tests. Any implementation of the InvoicePortal can freely be refactored without the need to change the invoice service tests.

- Clearer high-level code.

The InvoiceService has now become more domain-oriented. A rich domain layer conveys more information to the reader of the code. This might be your co-worker, but it could very well be you two months from now.

- Predictable IDE refactors.

Without mocking frameworks, the type-system can do its job once again. IDEs are able to perform refactoring operations without having to go into the dark art of looking up magic string references.

- Low-complexity fakes are easy to control.

The fake implementation of our InvoicePortal interface is very simple. We can add methods to influence its behavior. An example of this would be staging exceptions or fixing responses.
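Staging an exception could look roughly like this. The stageException method is a hypothetical addition, not part of the InvoicePortal interface or of the fake shown earlier, and Invoice is reduced to a stand-in so the snippet is self-contained.

```php
<?php

final class UnableToInvoiceClient extends RuntimeException
{
}

// Stand-in for the post's Invoice, reduced to two fields.
final class Invoice
{
    private string $clientId;
    private int $amount;

    public function __construct(string $clientId, int $amount)
    {
        $this->clientId = $clientId;
        $this->amount = $amount;
    }
}

interface InvoicePortal
{
    /** @throws UnableToInvoiceClient */
    public function submitInvoice(Invoice $invoice): void;

    public function wasInvoiceSubmitted(Invoice $invoice): bool;
}

final class FakeInvoicePortal implements InvoicePortal
{
    private array $submittedInvoices = [];
    private ?UnableToInvoiceClient $stagedException = null;

    // Hypothetical test helper: the next submitInvoice() call throws this exception.
    public function stageException(UnableToInvoiceClient $exception): void
    {
        $this->stagedException = $exception;
    }

    public function submitInvoice(Invoice $invoice): void
    {
        if ($this->stagedException !== null) {
            // Throw once, then return to normal behavior.
            $exception = $this->stagedException;
            $this->stagedException = null;

            throw $exception;
        }

        $this->submittedInvoices[] = $invoice;
    }

    public function wasInvoiceSubmitted(Invoice $invoice): bool
    {
        return in_array($invoice, $this->submittedInvoices, false);
    }
}

$portal = new FakeInvoicePortal();
$invoice = new Invoice('abc', 42);
$portal->stageException(new UnableToInvoiceClient('Portal is unavailable'));

$failed = false;
try {
    $portal->submitInvoice($invoice);
} catch (UnableToInvoiceClient $exception) {
    $failed = true;
}

$submittedAfterFailure = $portal->wasInvoiceSubmitted($invoice);

$portal->submitInvoice($invoice); // No exception is staged any more.
$submittedAfterRetry = $portal->wasInvoiceSubmitted($invoice);
```

A test for a failure scenario could then stage the exception in its Arrange step and call PHPUnit's expectException() before the Act step, without any mocking framework involved.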

More examples.

I've created an example repository you can check out to see how you can expect exceptions in tests or how you can create contract tests for failure scenarios.

Check out the example repository if you want to dive in a little deeper. I hope you enjoyed this post. Until next time!

Want to read more about this subject?

- The 5 types of test doubles and how to create them in PHPUnit

In this post, Jessica Mauerhan explains the different types of test doubles. She takes an alternate perspective on the matter that I'm sure you'll appreciate.

- Mocks Aren't Stubs

In 2007, Martin Fowler wrote down his thoughts on the use of mocking frameworks, the differences between classic TDD and mockist TDD, and other aspects of writing tests.

Photo credits: Larry Imes